Task 3: Word sense disambiguation

Task definition

In Natural Language Processing, Word Sense Disambiguation is the problem of identifying which sense (meaning) of a word is used in a particular context. Word Sense Disambiguation is still an open NLP problem mainly because of the lack of large-scale sense-annotated training corpora, especially for under-resourced languages. The available training resources (e.g. SemCor) have been usually accused of having scarce data to train a good supervised model (due to the highly imbalanced data and most frequent sense bias). Typically, there are three main approaches to WSD:

- supervised models trained on large sense-annotated corpora,

- knowledge-based approaches that use the properties of available language resources (wordnets, thesauri, ontologies),

- weakly supervised approaches (semi-supervised learning) that use both labeled and unlabeled data by applying bootstrapping methods, starting from a small data seed to train a model, and then applying the model on unlabeled samples to obtain more training data.

Despite being very successful, supervised approaches have a limited coverage of the vocabulary and deal only with a small narrow subset of senses.

Common approaches to WSD:

- Lesk Algorithm

- Distributional Lesk

- Personalized PageRank

- Neural Models

- Semi-supervised Learning with Neural Models

Examples

“To zagadnienie należy do **problemów** NP-trudnych, których...”

“Ogrzewanie mieszkania w zimie trochę kosztuje, bo mamy **piec** elektryczny.”

Development Data for the Competition

Fixed competition

The participants can only use the following resources:

- Słowosieć - plWordNet (Ad 2. Eval. Proc. and Data Format),

- Usage examples and glosses attached to plWordNet senses (Ad 1. in Eval. Proc. and Data Format),

- Unstructured textual corpora (no sense annotations!).

- Please do not use sense annotated corpora (e.g. Składnica)

All the data is available under following URL:

https://gitlab.clarin-pl.eu/ajanz/poleval20-wsd

Open competition

- It is allowed to use any useful knowledge sources and expansions for the purpose of this competition: plWordNet links to Wikipedia, Ontologies (SUMO, YAGO), existing thesauri, valency dictionaries (Walenty) etc.

- It is also allowed to use sense annotated corpora (e.g. using Składnica to train or tune the model).

The other part of our development data for testing the proposed solutions contains a small collection of manually annotated documents from Składnica (also available at https://gitlab.clarin-pl.eu/ajanz/poleval20-wsd). To evaluate the performance, we usually compute the precision and recall (section Evaluation Measure).

Evaluation Measure

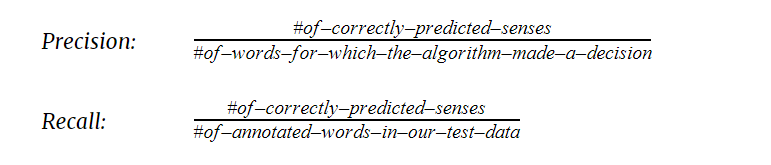

To evaluate the model we use precision and recall metrics computed in a following way:

Test Data

UPDATED ON 21.08.2020!

The final test data is available here for download: testdata.7z. It contains the following:

- Manually annotated corpus of Sherlock Holmes stories.

- Manually annotated part of KPWr corpus.

Please see the description of the data availabla here:

https://gitlab.clarin-pl.eu/ajanz/poleval20-wsd/-/blob/master/README.md

Evaluation Procedure and Data Format

We provide two initial knowledge sources for the task of word sense disambiguation:

Ad 1. plWordNet glosses and usage examples

Textual glosses and usage examples assigned to plWordNet senses. A provided textual file contains synset identifiers (our classes) with their short definitions and usage examples (if available). The words representing synsets in usage examples were marked with a double star ** symbol. The definitions may not contain any marked word (the described word does not exist in the definition).

synset_defs_examples.txt ======================== s1319 "Ten **piec** z łatwością ogrzeje całe mieszkanie do 80m2." s1319 "Podstawowy element instalacji grzewczej..." s789 "To zagadnienie należy do **problemów** NP-trudnych, których..."

Ad 2. The lexico-semantic structure of plWordNet

The lexico-semantic structure of plWordNet (lexico-semantic links between senses and synsets). The lexico-semantic structure of plWordNet was prepared as a collection of textual files representing lemmas, lexical units (senses), synsets (set of synonymous senses), and lexico-semantic relations.

A) This file represents all lemmas and their Part-of-Speech:

lemmas.txt ========== 1 piec,noun 2 urządzenie_grzewcze,noun 3 czerwona_kartka,noun 4 wypić,verb 5 szybko,adv 6 prędko,adv 7 superszybko,adv 8 piec,verb ...

B) This file represents all possible synsets (recognized classes!):

synsets.txt =========== 1 s10 2 s11 3 s12 ...

C) This file represents all lexical units (senses) for all existing lemmas in plWordNet. The file is a simple mapping between lemmas.txt and synsets.txt (lexical units are represented by their lemma, PoS, and synset they belong to). The mapping joins rows from lemmas.txt with the rows from synsets.txt to represent unique senses:

lexicalunits.txt

=================

1 1 // this means, that there is a lexical unit piec.noun

// (lemma and PoS, first row from lemmas.txt file)

// that belongs to synset identified as s10

// (first row in synsets.txt)

2 1 // this lexical unit (urządzenie_grzewcze.noun)

// belongs to the same synset s10, thus it’s a synonym

5 2 // the lexical unit (szybko.adv) belongs to synset s11

6 2 // this lexical unit (prędko.adv) belongs to s11, so

// it’s a synonym of(szybko.adv)

D) This file represents all semantic relations between plWordNet synsets (it is based on synsets.txt file, e.g. 1st row of synsets.txt is linked with 2nd row of synsets.txt):

synset_rels.txt

==============

1 2 hyponymy // synset s10 is linked with s11 (synsets.txt file) by

// hyponymy relation s10 -[hyponymy]-> s11

2 1 hypernymy // reverse relation s11 -[hypernymy]-> s10

...

E) This file describes lexico-semantic relations between plWordNet senses (it is based on lexicalunits.txt file, e.g. 3rd row of lexicalunits.txt is linked with 5th row of lexicalunits.txt):

lexicalunit_rels.txt =================== 3 5 stopnie // szybko.adv.s11 -[stopnie]-> superszybko.adv.s12 ...

Final format description (explained visually):

1)

lemmas.txt lexicalunits.txt synsets.txt ========== ================ =========== piec,noun (*) 1 1 s10 (*) urządz._grzewcze,noun (*) 2 1 s11 (^) czerwona_kartka,noun 5 2 s12 ($) wypić,verb 6 2 ... szybko,adv (^) 7 3 prędko,adv (^) ... superszybko,adv ($) piec,verb ...

2)

lexicalunit_rels.txt lexicalunits.txt

==================== ================

3 5 (%) 1 1

2 1

5 2 (%)

6 2

7 3 (%)

3)

synset_rels.txt synsets.txt

============== ================

1 2 (#) s10 (#)

s11 (#)

s12

Ad 3. Sense Annotated Treebank - Składnica

We provide a manually annotated treebank with sense annotations (http://zil.ipipan.waw.pl/Składnica) as a final part of our development data. The treebank was automatically converted to CCL format (http://nlp.pwr.wroc.pl/redmine/projects/corpus2/wiki/CCL_format) which is a simplified XML format for digital text representation. The format specifies the information about text segmentation (<chunk>, <sentence>, <token>), lemmatisation (<base>), morphosyntactic metadata (<ctag>), as well as the metadata with user-specified properties (<prop>). In the case of WSD, we use our custom property to assign correct synset identifiers to tokens (“sense” property key). The simplified treebank with sense annotations (skladnica-ccl.7z) is available at: https://gitlab.clarin-pl.eu/ajanz/poleval20-wsd.

...

<sentence id="s7">

<tok>

<orth>Jak</orth>

<lex><base>jak</base><ctag>padv</ctag></lex>

</tok>

<tok>

<orth>się</orth>

<lex><base>się</base><ctag>qub</ctag></lex>

</tok>

<tok>

<orth>ją</orth>

<lex><base>on</base><ctag>ppron3:sg:acc:f:ter:akc:npraep</ctag></lex>

</tok>

<tok>

<orth>trenuje</orth>

<lex><base>trenować</base><ctag>fin:sg:ter:imperf</ctag></lex>

<prop key="sense">54346</prop>

</tok>

<ns/>

<tok>

<orth>?</orth>

<lex><base>?</base><ctag>interp</ctag></lex>

</tok>

</sentence>

<sentence id="s8">

<tok>

<orth>Uczy</orth>

<lex><base>uczyć</base><ctag>fin:sg:ter:imperf</ctag></lex>

<prop key="sense">1449</prop>

</tok>

<tok>

<orth>się</orth>

<lex><base>się</base><ctag>qub</ctag></lex>

</tok>

...